Johnson was a math major at Trinity University in San Antonio, TX. In his early twenties, while playing for the Baltimore Orioles, Johnson wrote simulations of the Orioles' batting order and concluded that the lineup used most often in 1969 was the sixth-worst possible lineup (baseballhall.org/discover-more/news/johnson-davey). He was unable to convince manager Earl Weaver that he should bat second in the lineup.

In 1984, his first year as Mets manager, he used dBase II to compile data on each opposing pitcher and each Mets hitter's record against that pitcher. This is routine today, but it was innovative in 1984.
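To make the idea of a hitter-versus-pitcher record concrete, here is a minimal sketch in base R (not dBase II, and not Johnson's actual data or method). The rows, the names `Hitter A`, `Pitcher X`, and so on, and the data frame `matchups` are all invented for illustration.

```r
# Hypothetical game log: one row per hitter/pitcher game line.
# All names and numbers are made up for illustration.
matchups <- data.frame(
  hitter  = c("Hitter A", "Hitter A", "Hitter B", "Hitter B", "Hitter A"),
  pitcher = c("Pitcher X", "Pitcher Y", "Pitcher X", "Pitcher X", "Pitcher X"),
  AB      = c(4, 3, 4, 3, 5),
  H       = c(2, 0, 1, 1, 1)
)

# Sum at bats and hits for every hitter/pitcher pair
by_pair <- aggregate(cbind(AB, H) ~ hitter + pitcher, data = matchups, FUN = sum)

# Batting average of each hitter against each pitcher
by_pair$BA <- round(by_pair$H / by_pair$AB, 3)

# List each pitcher's opponents from best to worst average
by_pair <- by_pair[order(by_pair$pitcher, -by_pair$BA), ]
print(by_pair)
```

A table like this lets a manager look up, for a given opposing starter, which of his hitters have fared best against him. With samples this small the averages are noisy, so they are a prompt for judgment rather than a rule.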

He was particularly interested in the statistic On-Base Percentage (OBP), which measures how often a batter reaches base, and thus creates scoring opportunities. It is more comprehensive than simple Batting Average, and it incorporates the adage, “A walk is (almost) as good as a hit.” OBP equals (Hits + Walks + Hit by Pitch) / (At Bats + Walks + Hit by Pitch + Sacrifice Flies). As a result of his analysis he decided star Mookie Wilson was not the optimal leadoff hitter, and Johnson replaced Wilson at leadoff with Wally Backman.
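The OBP formula is easy to check by hand. The sketch below uses two hypothetical hitters (the stat lines are illustrative, not real season totals) who have the same batting average but different walk totals, to show why OBP can separate hitters that batting average treats as equal.

```r
# On-base percentage, following the formula in the text:
# (H + BB + HBP) / (AB + BB + HBP + SF)
obp <- function(H, BB, HBP, AB, SF) {
  (H + BB + HBP) / (AB + BB + HBP + SF)
}

# Batting average for comparison: hits per at bat
ba <- function(H, AB) {
  H / AB
}

# Two hypothetical stat lines (invented numbers): same hits and at bats,
# but the first hitter walks three times as often
patient      <- list(H = 120, BB = 60, HBP = 2, AB = 450, SF = 3)
free_swinger <- list(H = 120, BB = 20, HBP = 2, AB = 450, SF = 3)

ba_patient <- ba(patient$H, patient$AB)
ba_free    <- ba(free_swinger$H, free_swinger$AB)

# do.call matches the list names to the function's argument names
obp_patient <- do.call(obp, patient)
obp_free    <- do.call(obp, free_swinger)

round(c(ba_patient, ba_free), 3)    # 0.267 0.267
round(c(obp_patient, obp_free), 3)  # 0.353 0.299
```

Both hitters bat .267, but the extra 40 walks raise the first hitter's OBP by more than 50 points, which is the kind of gap that matters when choosing a leadoff hitter.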

All the key Mets players improved their OBP in 1984 versus 1983 (except Hernandez, who was over .400 in both years), but Backman's 1984 OBP of .360 was much higher than Wilson's .308, largely due to Backman's higher number of walks.

Johnson managed the Mets from 1984 to early 1990. He won at least 90 games in each of his first five seasons and finished first or second in the NL East in all six full seasons. The 1983 Mets had won only 68 games. Part of his success was due to the emergence of Darryl Strawberry and Dwight Gooden, and the acquisitions of Keith Hernandez and Gary Carter. Johnson was known for platooning his players, even his stars. It is debatable how many wins are due to the manager (sportslawblogger.com says at most 5 wins per season), but Johnson's managerial record with the Mets is formidable. His lifetime winning percentage, including managerial stints elsewhere, is .562.

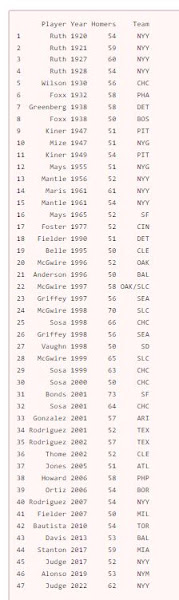

As a player Johnson was a second baseman from 1965 through 1978, most notably for the Baltimore Orioles and the Atlanta Braves. In Baltimore he was part of a great defensive infield along with Brooks Robinson and Mark Belanger. In Atlanta, as part of a lineup including Hank Aaron and Darrell Evans, he had a season with 43 home runs (42 as second baseman, plus 1 as pinch hitter), tying Rogers Hornsby’s record for most home runs by a second baseman.

Johnson's player statistics are as follows. They are not Hall of Fame calibre, but they are pretty good:

Billy Beane, the subject of the book Moneyball that popularized sabermetrics, played five games for Johnson's 1984 Mets and eight games for Johnson's 1985 Mets. You have to wonder how much Beane was influenced by Johnson.

The R code is as follows:

```r
library(Lahman)
library(tidyverse)
library(ggplot2)

data(Batting)
data(People)
data(Teams)

# Shared ggplot2 theme used by both charts
common_theme <- theme(
  legend.position = "right",
  plot.title = element_text(size = 15, face = "bold"),
  plot.subtitle = element_text(size = 12.5, face = "bold"),
  axis.title = element_text(size = 15, face = "bold"),
  axis.text = element_text(size = 15, face = "bold"),
  axis.text.x = element_text(angle = 45, hjust = 1),
  legend.title = element_text(size = 15, face = "bold"),
  legend.text = element_text(size = 15, face = "bold"))

# Mets key hitters 1983, 1984:
stars <- c("Backman", "Brooks", "Hernandez", "Foster", "Strawberry", "Wilson")

df <- Batting %>%
  filter((yearID == 1983 | yearID == 1984) & teamID == "NYN") %>%
  left_join(People, by = "playerID") %>%
  # keep the listed last names; among any Hernandezes, keep only Keith
  filter(nameLast %in% stars, nameLast != "Hernandez" | nameFirst == "Keith") %>%
  mutate(
    BA = round(H / AB, 3),
    OBP = round((H + BB + HBP) / (AB + BB + HBP + SF), 3)
  ) %>%
  select(nameFirst, nameLast, yearID, H, BB, HBP, AB, SF, BA, OBP) %>%
  arrange(yearID, desc(OBP))

# Side-by-side bars: each player's OBP in 1983 versus 1984
ggplot(df, aes(x = nameLast, y = OBP, group = factor(yearID), fill = factor(yearID))) +
  geom_bar(stat = "identity", position = "dodge", width = .9) +
  scale_fill_manual(values = c("1983" = "red", "1984" = "blue")) +
  labs(title = "Mets Key Player OBP 1983, 1984",
       x = "Player",
       y = "On Base Percentage",
       fill = "Year") +
  common_theme

# NY Mets number of wins
mets_records <- Teams %>%
  filter(teamID == "NYN", yearID %in% seq(from = 1982, to = 1989, by = 1)) %>%
  mutate(
    BA = round(H / AB, 3),
    OBP = round((H + BB + HBP) / (AB + BB + HBP + SF), 3)
  ) %>%
  select(yearID, W, L, H, HR, BA, OBP)

print(mets_records)

# Flag the seasons before Johnson took over (1982, 1983)
mets_records$mgr <- ifelse(mets_records$yearID == 1982 | mets_records$yearID == 1983, "Not Davey", "Davey")
mets_records$mgr <- factor(mets_records$mgr, levels = c("Not Davey", "Davey"))

ggplot(mets_records, aes(x = yearID, y = W, group = factor(mgr), fill = factor(mgr))) +
  geom_bar(stat = "identity") +
  scale_fill_manual(values = c("Not Davey" = "red", "Davey" = "blue")) +
  labs(title = "Mets Number of Wins by Year",
       x = "Year",
       y = "Number of Wins",
       fill = "Manager") +
  common_theme

# find Davey Johnson in People:
People %>% filter(nameFirst == "Davey" & nameLast == "Johnson")

# his playerID is johnsda02
df <- Batting %>% filter(playerID == "johnsda02") %>%
  left_join(People, by = "playerID") %>%
  mutate(
    BA = round(H / AB, 3),
    OBP = round((H + BB + HBP) / (AB + BB + HBP + SF), 3)
  ) %>%
  select(nameFirst, nameLast, yearID, teamID, H, BB, HBP, AB, SF, HR, BA, OBP)

# Calculate the totals row
totals_row <- df %>%
  summarize(
    nameFirst = "Totals",
    nameLast = "",
    yearID = NA_integer_, # NA is used for columns without a meaningful sum
    H = sum(H),
    BB = sum(BB),
    HBP = sum(HBP),
    AB = sum(AB),
    SF = sum(SF),
    HR = sum(HR),
    # H, AB, etc. now refer to the career totals computed above
    BA = round(sum(H) / sum(AB), 3),
    OBP = round(sum(H + BB + HBP) / sum(AB + BB + HBP + SF), 3)
  )

# teamID is not set in the totals row, so bind_rows fills it with NA
df_with_totals <- bind_rows(df, totals_row)

print(df_with_totals)
```